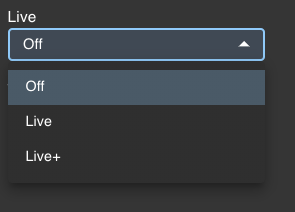

Data & Settings, Deep Agent, & MCP

Unlock advanced AI capabilities with datasets, intelligent agents, and powerful integrations!

Data & Settings

Ask Sage Datasets

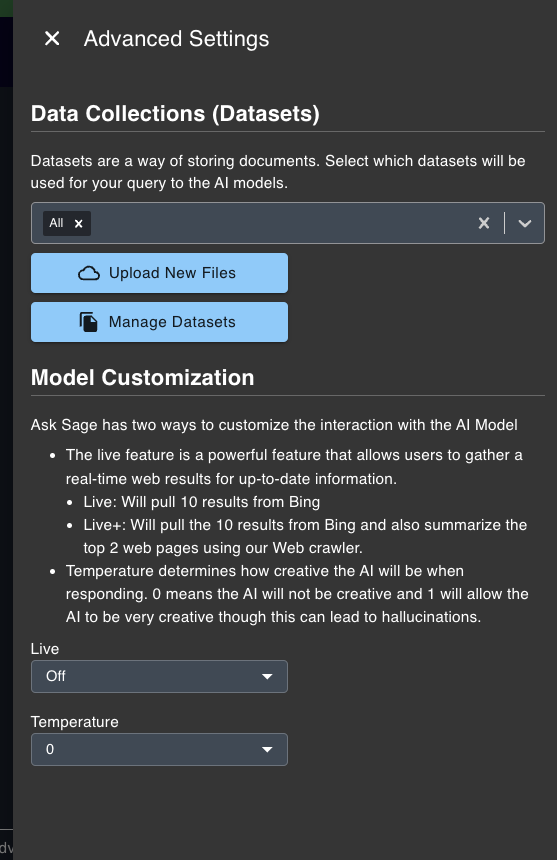

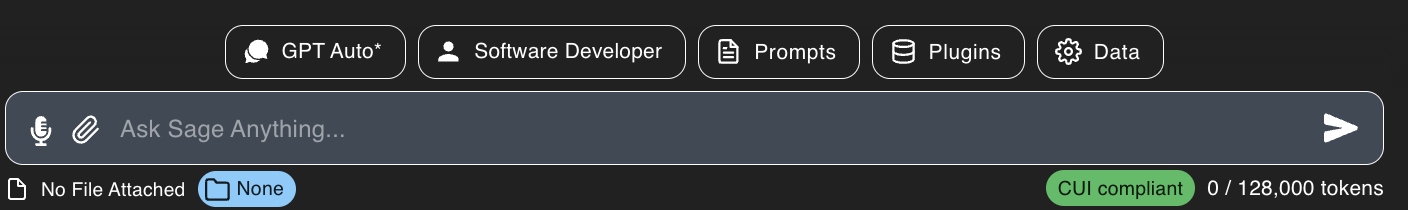

Dataset selection is also available via the Data & Settings button. Select one, several, or all of the datasets you want the prompt to reference and generate text from.

The dataset feature allows users to generate text from a specific dataset. This is important since GenAI models are trained on data that is locked in time and may not have the most up-to-date information. By selecting a dataset, you can ensure that the model generates text based on the most current information you want to reference.

How Does it Work?

The dataset feature in Ask Sage Chat enables users to select one or multiple datasets. This process utilizes Retrieval-Augmented Generation (RAG) to extract the most relevant information from the chosen dataset(s) and generate responses based on that data.
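The retrieve-then-augment flow described above can be illustrated with a minimal sketch. This is not Ask Sage's implementation: the function names (`retrieve`, `build_augmented_prompt`), the sample dataset, and the prompt wording are hypothetical, and simple word overlap stands in for the semantic search a production RAG system would typically use.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents ranked by word overlap with the query.

    Word overlap is an illustrative stand-in for real relevance scoring.
    """
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Drop documents that share no words with the query.
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_augmented_prompt(query, documents, top_k=2):
    """Prepend the retrieved passages to the user's question."""
    passages = retrieve(query, documents, top_k)
    context_block = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Use the context below to answer.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


# Hypothetical dataset contents, for illustration only.
dataset = [
    "The travel policy was updated in March to require manager approval.",
    "The cafeteria menu changes every Monday.",
    "Expense reports for travel are due within 30 days.",
]
prompt = build_augmented_prompt("What is the travel policy for approval?", dataset)
print(prompt)
```

The model then answers from the augmented prompt rather than from its training data alone, which is why selecting a dataset lets responses reflect more current information.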

What is RAG?

RAG enhances the GenAI process by retrieving pertinent external context and integrating it into the original prompt. This involves two key steps:

Unclassified or CUI. Other tenants may offer additional classification options.

Note: To use the CUI label, you must have a CAC/PIV card or, if you do not have one, request activation of CUI classification by emailing support@asksage.ai. Access is not granted automatically; follow-on instructions will be provided by email.

Web Search

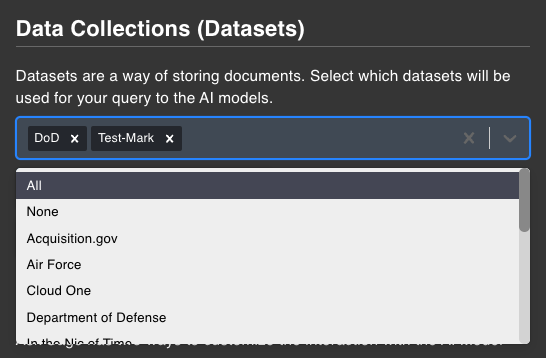

The Web Search feature can be accessed via the Data & Settings button and offers three options: Off, Live, and Live+. By default, the live feature is set to Off. Activating the Web Search toggle automatically switches it to Live+ mode.

The live feature runs a real-time web search and includes up-to-date information in the model's responses.

The live feature is not compliant for CUI or other sensitive data: it uses a search engine to pull information from the web, and we cannot control what information the search engine collects.

Temperature

The temperature parameter is available via the Data & Settings button. Use it to adjust the temperature of the model. By default, the temperature is set to 0.0.

The temperature parameter regulates the randomness of the model's responses. A lower temperature results in more deterministic and consistent outputs, while a higher temperature introduces greater variability and creativity. Consequently, while a higher temperature can lead to more imaginative responses, it may also result in less coherent or structured outputs.
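To make the effect concrete, here is a minimal sketch of how temperature is commonly applied when turning a model's raw token scores into probabilities. It illustrates the general technique only; the function name `token_probabilities` and the sample scores are hypothetical, and treating a temperature of 0.0 as "always pick the top-scoring token" is a common convention assumed here, not a documented detail of Ask Sage or its models.

```python
import math


def token_probabilities(logits, temperature):
    """Convert raw token scores into probabilities at a given temperature."""
    if temperature <= 0.0:
        # Assumed convention: temperature 0.0 means greedy selection,
        # so all probability goes to the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    # Dividing by the temperature sharpens (T < 1) or flattens (T > 1) the scores.
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate tokens.
scores = [2.0, 1.0, 0.5]
for temperature in (0.0, 0.5, 1.0):
    probs = token_probabilities(scores, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

As the temperature rises, probability shifts from the top-scoring token toward the alternatives, which is the source of the added variability.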

A temperature of 0.0 is recommended for most use cases, as it provides the most accurate and relevant results. If you want more creative or varied responses, increase the temperature to 0.5 or 1.0.

Deep Agent

We are thrilled to introduce Deep Agent, a groundbreaking feature in the Ask Sage GenAI platform. Deep Agent is the first AI agent capable of conducting searches across both the web and your secure datasets, including Retrieval-Augmented Generation (RAG) sources. This innovation empowers users to perform advanced research and analysis with unprecedented ease and precision.

How Deep Agent Works

Deep Agent leverages advanced AI agents to iteratively perform complex research tasks. It automatically generates queries and prompts, seamlessly exploring the web, your private datasets, or both. This capability enables users to uncover deeper insights and achieve higher productivity.

Token Usage Guidelines

Each Deep Agent query initiates multiple prompts, which can consume a significant number of tokens. To optimize your experience, select your models carefully. By default, Deep Agent uses the 4o model, which balances cost-effectiveness and performance.

- A single Deep Agent query may generate dozens of prompts, depending on the complexity of the task.

- For example, a query analyzing both web and RAG data might consume 500–1,000 tokens per session.

Beta Mode: Continuous Improvement

Deep Agent is currently in beta mode, and we are actively refining its performance. Your feedback is invaluable in helping us improve results and enhance automation.

Model Context Protocol (MCP)

The Model Context Protocol (MCP) is a powerful framework designed to enable seamless interaction between users and AI models. MCP provides a structured way to define, manage, and execute actions or tools within a given context. By leveraging MCP, users can extend the capabilities of AI models to perform specific tasks, automate workflows, and integrate external systems or APIs.
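The idea of defining, managing, and executing tools can be sketched as a small registry. This is a conceptual illustration only, not the MCP SDK or its wire format: the `Tool` and `ToolRegistry` classes, the `word_count` tool, and the shape of the returned dictionaries are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Tool:
    """A named action that a model can ask the host application to run."""
    name: str
    description: str
    handler: Callable[..., Any]


class ToolRegistry:
    """Holds the tools available in the current context (hypothetical design)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        """Define a tool so it can be listed and called later."""
        self._tools[tool.name] = tool

    def list_tools(self) -> List[Dict[str, str]]:
        """Describe the available tools so a model can choose among them."""
        return [
            {"name": tool.name, "description": tool.description}
            for tool in self._tools.values()
        ]

    def call(self, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
        """Execute a tool by name with keyword arguments."""
        if name not in self._tools:
            return {"ok": False, "error": f"unknown tool: {name}"}
        result = self._tools[name].handler(**arguments)
        return {"ok": True, "result": result}


registry = ToolRegistry()
registry.register(
    Tool("word_count", "Count the words in a text", lambda text: len(text.split()))
)
print(registry.list_tools())
print(registry.call("word_count", {"text": "hello from Ask Sage"}))
```

In this sketch the model never runs code itself: it selects a tool by name and supplies arguments, and the host application performs the action and returns the result.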